Keynote Speakers

Léon Koopmans

Professor in gravitational lensing at the Kapteyn Astronomical Institute, University of Groningen, the Netherlands.

“There are many ways to measure the Hubble constant, but gravitational lenses allow for a unique method which is relatively simple. It is therefore expected that gravitational lenses will play an increasingly important role in the future.”

Léon Koopmans, in NEMO Kennislink

Cosmologist Léon Koopmans is currently director of the Kapteyn Astronomical Institute of the University of Groningen (UG), where he is a full professor working on gravitational lensing, dark matter, galaxy structure, formation and evolution, stellar dynamics, reionization and interferometry. In 2005, Koopmans won a Vidi grant from the Netherlands Organization for Scientific Research for his research into gravitational lenses. Some of his major research projects are the LOFAR EoR KSP, the AARTFAAC Cosmic Explorer (ACE) and the SKA EoR/CD Science Team/SWG, where he is deeply involved in helping to design the next-generation global Square Kilometre Array radio telescope (SKA, and SKA-LOW in particular) for direct tomographic observations of neutral hydrogen only a few hundred million years after the Big Bang. Koopmans obtained his PhD (cum laude) at the UG in 2000. He continued with a postdoctoral position at the University of Manchester and then moved to the California Institute of Technology, where he kept a visiting Fellow status until 2004. In late 2002, he became an Institute Fellow at the Space Telescope Science Institute in Baltimore, before moving back to the Kapteyn Institute in early 2004 as a faculty member.

Hubble Tension between Early and Late Universe Measurements

The Hubble Constant is a measure of the expansion rate of the Universe, but in recent years a dichotomy, or tension at the 5-sigma level, has appeared between its measurement in the early Universe, for example via the Cosmic Microwave Background, and measurements in the late Universe via a wide range of methods. In this presentation, I focus on recent results from time-delay cosmography, where the Hubble constant is inferred from time-delay measurements between the light arriving from gravitationally lensed quasars. To alleviate this tension, the early and/or late universe measurements must still suffer from hidden systematics, or the standard cosmological model needs modification. Some possibilities of both scenarios are discussed.

Erik Verlinde

Professor of Theoretical Physics, University of Amsterdam, the Netherlands.

“We use concepts like time and space, but we don’t really understand what this means microscopically. That might change… I think there’s something we haven’t found yet, and this will help us discover the origins of our universe.”

Erik Verlinde, in UvA in the Spotlight

Erik Verlinde is a Dutch theoretical physicist, internationally recognized for his contributions to string theory and quantum field theory. His PhD work in conformal field theories led to the Verlinde formula, which is widely used by string theorists. He is currently best known for his work on emergent gravity, which proposes that both space-time and the gravitational force are not fundamental, but emergent properties arising from the entanglement of quantum information. Incorporating the expansion of the Universe into this theory even allowed him to predict, under specific circumstances, the excess gravity currently attributed to dark matter. Erik studied physics at the University of Utrecht and conducted his PhD research under the supervision of Bernard de Wit and Nobel Prize winner Gerard 't Hooft. At the end of his PhD, he moved to Princeton as a postdoctoral fellow. In 1993 Erik accepted a tenured position at CERN, and in 1996 Utrecht University appointed him as professor of Physics. In 1999 he was also awarded a professorship at Princeton University. Since 2003 Erik Verlinde has been a professor of Physics at the Institute for Theoretical Physics of the University of Amsterdam. In 2011 he was awarded the Spinoza Prize, the most prestigious award for scientists in the Netherlands.

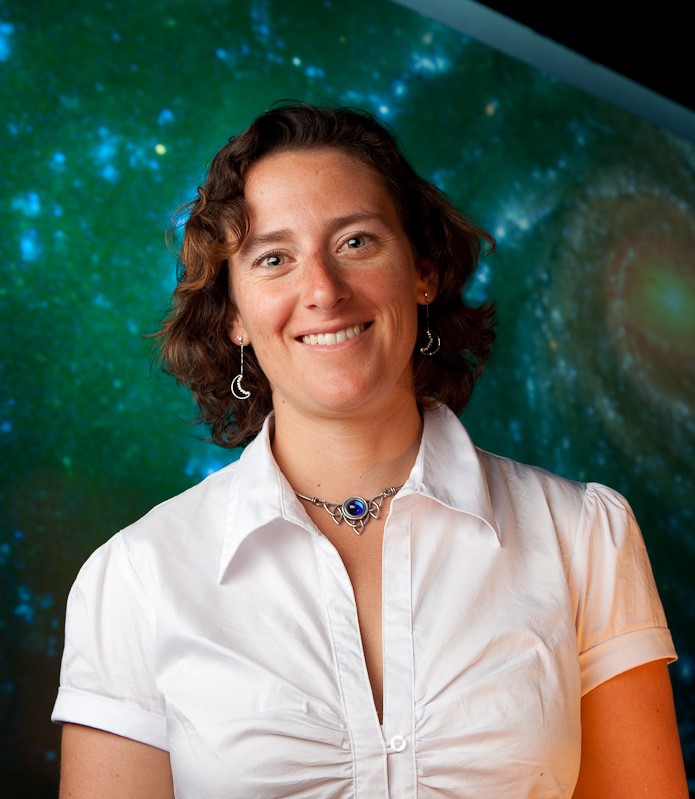

Tamara Davis

Professor of astrophysics at the University of Queensland, Australia.

“I mostly started looking at dark energy because I started working on supernovae and I tried to figure out what the dark energy could be. Perhaps some form of vacuum energy, something that has negative pressure or some sort of antigravity, which would be really strange. But it also could be that our theory of gravity might need revision.”

Tamara Davis, in The Science Show

Astrophysicist Tamara Davis is a Vice-Chancellor's Research and Teaching Fellow at the University of Queensland, Australia. Her expertise is the elusive "dark energy" that's accelerating the universe. She led the Dark Theme within the Australian Research Council Centre of Excellence for All-sky Astrophysics, is helping manage the OzDES survey in collaboration with the international Dark Energy Survey, and is now working with the Dark Energy Spectroscopic Instrument project. She has measured time-dilation in distant supernovae, helped make one of the largest maps of the distribution of galaxies in the universe, and is now measuring how supermassive black holes have grown over the last 12 billion years. The Australian Academy of Science awarded her the Nancy Millis Medal in 2015 for outstanding female leadership in science. She received the Astronomical Society of Australia’s Louise Webster Medal Prize in 2009 for the young researcher with the highest international impact, and the Australian Institute of Physics named her its Women in Physics Lecturer in 2011. Davis did her PhD at the University of New South Wales on theoretical cosmology and black holes, then worked on supernova cosmology in two postdoctoral fellowships, at the Australian National University and at the University of Copenhagen, before moving to Queensland to join the WiggleZ Dark Energy Survey team working on mapping the galaxies in the universe.

Cosmological tensions, and whether measurements or theory will resolve them

Recently tensions have emerged between measurements of important features of our universe made using different techniques. Whether these tensions are due to measurement error or theoretical inadequacy is a key question of modern cosmology. In this talk I will review some of the many measurements that can now be made, examine where they disagree, and discuss whether improved theory or measurements (or both) are needed to resolve the tensions.

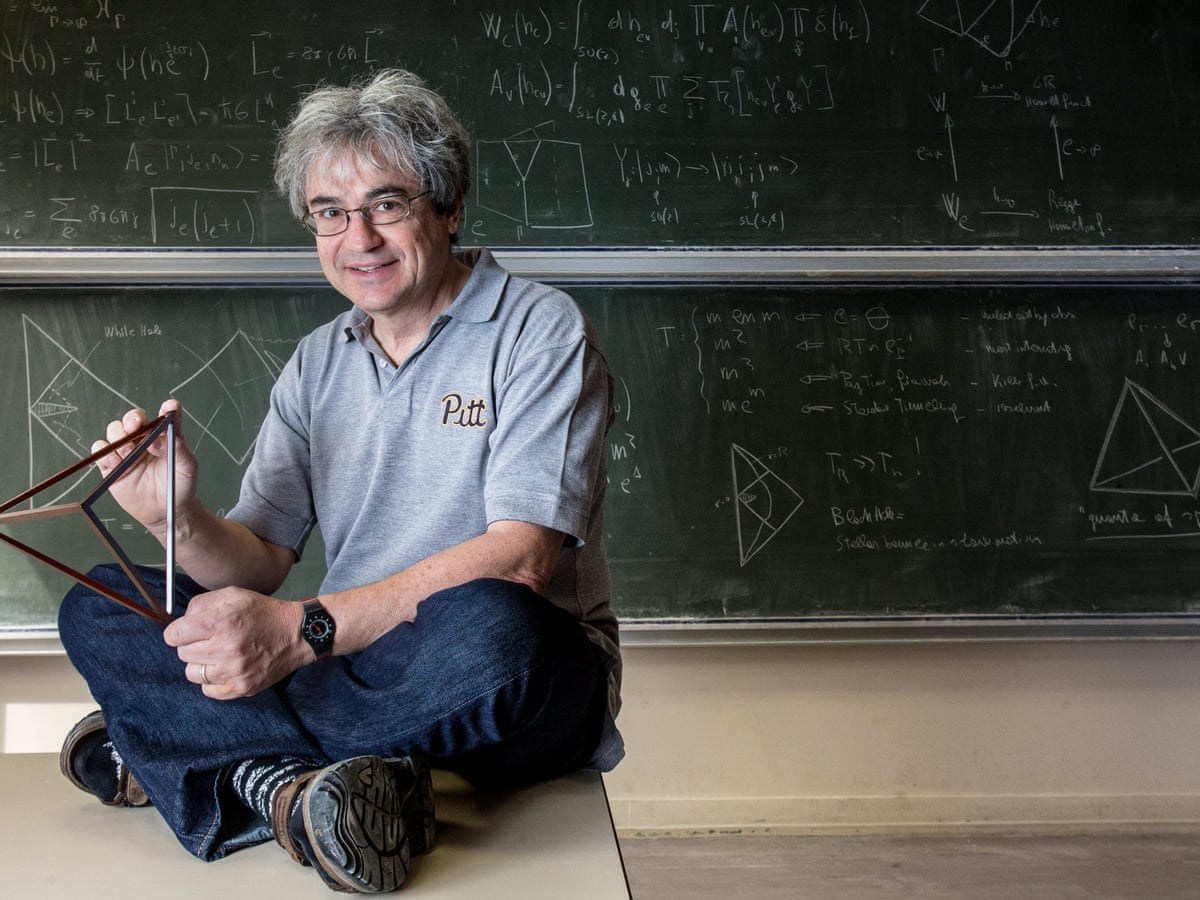

Carlo Rovelli

Theoretical physicist and writer who has worked in Italy, the United States and, since 2000, in France.

“If the strangeness of quantum theory confuses us, it also opens new perspectives with which to understand reality. A reality that is more subtle than the simplistic materialism of particles in space. A reality made up of relations rather than objects.”

Carlo Rovelli, in Helgoland: Making Sense of the Quantum Revolution

Theoretical physicist and writer Carlo Rovelli has worked in Italy, the United States and, since 2000, in France. He is also currently a Distinguished Visiting Research Chair at the Perimeter Institute in Ontario, Canada. He works mainly in the field of quantum gravity and is a founder of loop quantum gravity theory. He has also worked in the history and philosophy of science. He collaborates with several Italian newspapers and has written more than 200 scientific articles published in international journals. He has published two monographs on loop quantum gravity and several popular science books. His popular science book Seven Brief Lessons on Physics was originally published in Italian in 2014. It has been translated into 41 languages and has sold over a million copies worldwide. His most recent popular book is Helgoland, about quantum mechanics and its relational interpretation. The title refers to Werner Heisenberg's visits to Heligoland in the 1920s. In 2019, he was included by Foreign Policy magazine in a list of 100 most influential global thinkers.

Our current physics of the time direction does account for agency

I discuss in detail why a universe like ours, where fundamental physics is time reversal invariant, and the macroscopic world is formed by weakly interacting systems with long thermalization times and a commonly oriented entropy gradient, yields ubiquitous records of the past (and not of the future) and permits agents affecting the future (and not the past). For this, I give a simple physical characterisation of records and agency. The presentation is based on the general framework of Reichenbach's The Direction of Time and on some recent technical results, including thermodynamic bounds on the information stored in records and produced by agents.

Seth Lloyd

Professor of mechanical engineering and physics at the Massachusetts Institute of Technology.

“Thinking about the future of quantum computing, I have no idea if we're going to have a quantum computer in every smart phone, or if we're going to have quantum apps or quapps, that would allow us to communicate securely and find funky stuff using our quantum computers; that's a tall order. It's very likely that we're going to have quantum microprocessors in our computers and smart phones that are performing specific tasks.”

Seth Lloyd, in Quantum Hanky-Panky: A Conversation With Seth Lloyd [8.22.16]

Physicist Seth Lloyd is a professor of mechanical engineering and physics at the Massachusetts Institute of Technology. His research area is the interplay of information with complex systems, especially quantum systems. He has performed seminal work in the fields of quantum computation, quantum communication and quantum biology, including proposing the first technologically feasible design for a quantum computer, demonstrating the viability of quantum analog computation, proving quantum analogs of Shannon's noisy channel theorem, and designing novel methods for quantum error correction and noise reduction.

Ethics in the information processing universe

This talk investigates the question of ethics for general information processing systems. We compare approaches based on Aristotelian ethics with Spinoza's ethical theory, and show that Spinoza's concept of ethical behavior can be readily extended to general information processing systems. We discuss the consequences of the theory for classical and quantum artificial intelligence.

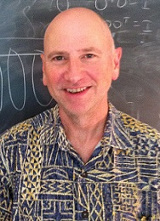

Larry Wasserman

Professor in the Department of Statistics and the Department of Machine Learning at Carnegie Mellon University.

“For most of us in Statistics, data means numbers. But data now includes images, documents, videos, web pages, twitter feeds and so on. Traditional data — numbers from experiments and observational studies — are still of vital importance but they represent a tiny fraction of the data out there. If we take the union of all the data in the world,”

Larry Wasserman, in Rise of the Machines

Larry Wasserman is a professor in the Department of Statistics and the Department of Machine Learning at Carnegie Mellon University. He received his Ph.D. from the University of Toronto in 1988. He was awarded the COPSS Presidents’ Award in 1999 for the outstanding statistician under age 40, and the CRM-SSC Prize in 2002. He is an elected fellow of the American Statistical Association, the Institute of Mathematical Statistics, and the American Association for the Advancement of Science. His research papers frequently focus on nonparametric inference, asymptotic theory, causality, and applications of statistics to astrophysics, bioinformatics, and genetics. He has also written two advanced statistics textbooks: “All of Statistics” and “All of Nonparametric Statistics.”

Foundations of Statistical Inference

Statistical inference plays a major role in most sciences. Yet, foundational issues that have been well understood for many years in statistics are still not well known in fields that use statistics. As a result, many statistical analyses in these fields use methods with potentially serious flaws. In this talk, I'll review some of these foundational issues.

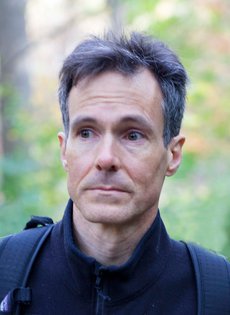

David Eric Smith

Physicist at the Georgia Institute of Technology.

“The important new form of order that makes life progressively less like any ordered equilibrium phase is the emergence of individuality. Individuality, whether in development, transmission, or selection, is a complex concept applicable at many levels.”

David Eric Smith, in The Origin and Nature of Life on Earth.

David Eric Smith, a physicist at the Georgia Institute of Technology, received a Bachelor of Science in Physics and Mathematics from the California Institute of Technology in 1987, and a Ph.D. in Physics from The University of Texas at Austin in 1993, with a dissertation on problems in string theory and high-temperature superconductivity. From 1993 to 2000 he worked in physical, nonlinear, and statistical acoustics at the Applied Research Labs: U. T. Austin, and at the Los Alamos National Laboratory. Since 2000 he has worked at the Santa Fe Institute on problems of self-organization in thermal, chemical, and biological systems. A focus of his current work is the statistical mechanics of the transition from the geochemistry of the early earth to the first levels of biological organization, with some emphasis on the emergence of the metabolic network.

Information gateways to life

The Drake equation was formulated to organize a famously underdetermined problem (the probability of encountering extraterrestrial civilization) into a hierarchy of conditional probabilities that reflected necessary dependencies, even if some of the factors themselves remain underdetermined. We can try to identify a similar hierarchy of stages through which matter must pass in the long and complex transition from abiotic early-planetary chemistry to a biosphere. In this sequence the dependencies are conditional entropies, or equivalently, increments of information. In the physics of matter, such hierarchies are the result of phase transitions, each of which is both a threshold and a platform. Each transition requires conditions capable of restricting a system's exploration of its states, but if those are met, the restricted phase serves as a pre-ordered and stable input to further transitions. The same combinatorics applies to life, first in its employment of distinctive ordered states of matter, and then in the costs of exploration and population of new forms by unguided processes that we call evolution. We will argue that metabolites, folded matter, and coded matter are information gateways in a Drake-like sequence of entropy reductions, and that the complex requirements of each that create a threshold for its formation play essential roles in making subsequent transitions possible.

Stephen Wolfram

Computer scientist, physicist and founder of the software company Wolfram Research.

“I guess I have a stronger belief in "truth" than I do in "proof." As experimental mathematics becomes more widespread, the divergence between truth and proof, in mathematics, will become larger. If you look at any area of science, there are far more experimentalists than theoreticians. Mathematics is the unique exception to this trend.”

Stephen Wolfram, in Paul Wellin, Mathematica in Education 2 (1993) 11–16.

Stephen Wolfram is a British-American computer scientist and physicist. He is known for his work in computer science, mathematics, and theoretical physics. In 2012, he was named a fellow of the American Mathematical Society. He is the founder of the software company Wolfram Research, where he worked as chief designer of Mathematica and the Wolfram Alpha answer engine. In March 2014, at the annual South by Southwest (SXSW) event, Wolfram officially announced the Wolfram Language as a new general multi-paradigm programming language, now better known as a multi-paradigm computational communication language, although it had previously been available through Mathematica and was not an entirely new programming language. The documentation for the language was pre-released in October 2013 to coincide with the bundling of Mathematica and the Wolfram Language on every Raspberry Pi. While the Wolfram Language has existed for over 30 years as the primary programming language used in Mathematica, it was not officially named until 2014.

Making Everything Computational - Including the Universe

I'll talk about my emerging new foundational understanding of computation based on three large-scale projects: (1) Our recent Physics Project, which provides a fundamentally computational model for the low-level operation of our universe, (2) My long-time investigation of the typical behavior of simple programs (such as cellular automata, combinators, etc.) in the computational universe and (3) The long-time development of the Wolfram Language as a computational language to describe the world. I'll describe my emerging concept of the multicomputational paradigm, and some of its implications for science, distributed computing, language design and the foundations of computation and mathematics.

Ruurd Oosterwoud

Founder and executive director at DROG

“The scope of disinformation is psychology and sociology but then on a mass scale”

Ruurd Oosterwoud, in TEDx Talks

Ruurd Oosterwoud is the founder and executive director at DROG, a partner company of Target Field Lab that develops innovative ways to combat fake news and disinformation in society, governments and businesses. How do disinformation and fake news work, what do they do to us, and how can we fight them? DROG focuses on policy, cyber security, education, journalism, and (strategic) communication. Internal collaboration is possible through DROG, and DROG, in turn, provides external expertise through its extensive network. Everything they do is tested, evaluated and criticised by scientific partners, and results are published in peer-reviewed journals. For example, for Forensic Journalism they are developing a monitoring service, based on data rather than narrative, to analyse the spread of disinformation on social media. Ruurd has an MA in Russian and Eurasian Studies from the University of Leiden. He was the first to specialize in Russian disinformation.

Data-driven solutions to countering disinformation

To this day, persistent problems remain in disinformation research. The field lacks workable definitions, it lacks the right data on the reach and prevalence of disinformation, and practically no impact assessments of interventions have been conducted. In this keynote, DROG will showcase innovative solutions to these issues. The first is a transactional model, called ‘disinfonomics’, to identify different types of disinformation and the way models are interconnected. Disinfonomics solves the definitional problem and can be used to determine more effective intervention models. The second is a proposed standardization for impact assessment that DROG has been developing in cooperation with the University of Cambridge, including a simulation environment to target social media users with disinformation, solving the question of election interference. The third is how DROG has been using metadata from social media to conduct network analysis on the basis of behavioral patterns, in close cooperation with Target Field Lab. This addresses the problem of disinformation detection and monitoring being about content, as (inauthentic) amplification makes a better objective starting point for research and monitoring.

Henk Hoekstra

Professor of Observational Cosmology at Leiden Observatory, University of Leiden, the Netherlands

"Cosmology is the study of the global questions about the Universe: how did it begin, what is it made of, how will it change in the future. These are very fundamental questions about why there is a Universe, but we try to answer them using scientific methods and our understanding of physics. Amazingly, the Universe turns out to be a strange place, and by trying to answer these basic questions we have learned that our current understanding of physics needs to be revised."

Henk Hoekstra, in Quotes Magazines

Since 2011, Henk Hoekstra has been a cosmology coordinator for Euclid, the ESA mission to study the nature of dark energy and many other aspects of our current cosmological paradigm. After completing his PhD (cum laude) at the University of Groningen in 2000, he moved to Canada, initially as a postdoctoral fellow at the Canadian Institute for Theoretical Astrophysics in Toronto. In 2004 he moved to the University of Victoria as an assistant professor and a scholar of the Canadian Institute for Advanced Research. In 2007 Henk Hoekstra was awarded a prestigious Alfred P. Sloan Fellowship. He returned to his native country in 2008 to join the faculty of Leiden Observatory. In that year he was awarded a Vidi grant by the Dutch funding agency NWO and a Marie Curie international reintegration grant from the European Union. His current research is funded by a starting grant from the European Research Council, which was awarded in 2011. His main area of research is observational cosmology, with a particular focus on the study of dark matter and dark energy using weak gravitational lensing.

Euclid: the ultimate cosmology mission?

The nature of the main ingredients of the standard model of cosmology, dark matter and dark energy, remains a mystery. To learn more, Euclid will measure the growth of structure over most of cosmic time with unprecedented precision, providing an impressive amount of information about the universe. Extracting this information is challenging, because a wide range of observational and astrophysical complications prevent a direct, simple interpretation. As the next generation of cosmological surveys is largely exhausting the available sky, we need to extract as much information as possible. I will highlight the challenges in doing so, and discuss how Euclid itself, supported by state-of-the-art simulations, can help to address these.

Charley Lineweaver

Convener of the Australian National University's Planetary Science Institute in Canberra, Australia

“The techniques of particle physics or cosmology fail utterly to describe the nature and origin of biological complexity. Darwinian evolution gives us an understanding of how biological complexity arose, but is less capable of providing a general principle of why it arises. “Survival of the fittest” is not necessarily “survival of the most complex”.”

Charley Lineweaver, in his book: Complexity and the Arrow of Time

Charles Lineweaver is the convener of the Planetary Science Institute at the Australian National University (ANU) in Canberra, where he is an associate professor in the Research School of Astronomy and Astrophysics and in the Research School of Earth Sciences. His research areas include cosmology, exoplanetology, astrobiology and evolutionary biology. He has plenty of projects for students, dealing with exoplanet statistics, the recession of the Moon, cosmic entropy production, major transitions in cosmic and biological evolution and phylogenetic trees. He was a member of the COBE satellite team that discovered the temperature fluctuations in the cosmic microwave background. Lineweaver obtained his undergraduate degree in physics from Ludwig-Maximilians-Universität, Munich, Germany and a Ph.D. in astrophysics from the University of California at Berkeley in 1994. Before his appointment at ANU, he held postdoctoral positions at Strasbourg Observatory and the University of New South Wales, where he taught one of the most popular general studies courses, "Are We Alone?".

Information's Dependence on Biology

To understand information, we need to look at its origin. Was all the information we have now present at the big bang? Or does information accumulate? There seem to be different kinds of information: coded and uncoded, useless and useful. Consider the difference between energy and free energy. Free energy is the kind of energy that can do work. One cannot do work with energy at maximum entropy (for example at the heat death of the universe). The digits in the number π = 3.141592653589793… contain all the information in the universe, but like energy at maximum entropy, the information in those digits (especially near the end :) is useless. Unlike the digits of π, the information in our DNA has been edited and re-edited for four billion years. It is useful. It has evolved to help its container grow, stay alive and reproduce. The coded information in your brain (now being used to decode these symbols) is there for the same reason. In this sense, useful information depends on biology. I will also discuss Borges’ Library of Babel and tell you more and more about some useless information.